Safe-sec-visor Architecture for Cyber-Physical Systems

The past few decades have seen growing demand for deploying (potentially) high-performance artificial intelligence-based (AI-based) controllers in Cyber-Physical Systems (CPS) for complex missions (e.g., autonomous driving). Nevertheless, incorporating these controllers makes it significantly harder to maintain the safety and security of CPS. Modern CPS, such as autonomous cars and unmanned aerial vehicles, are typically safety-critical and prone to various security threats due to the tight interaction and information exchange between their cyber and physical components. Therefore, the lack of verification for AI-based controllers could result in severe safety and security risks in real-world CPS applications.

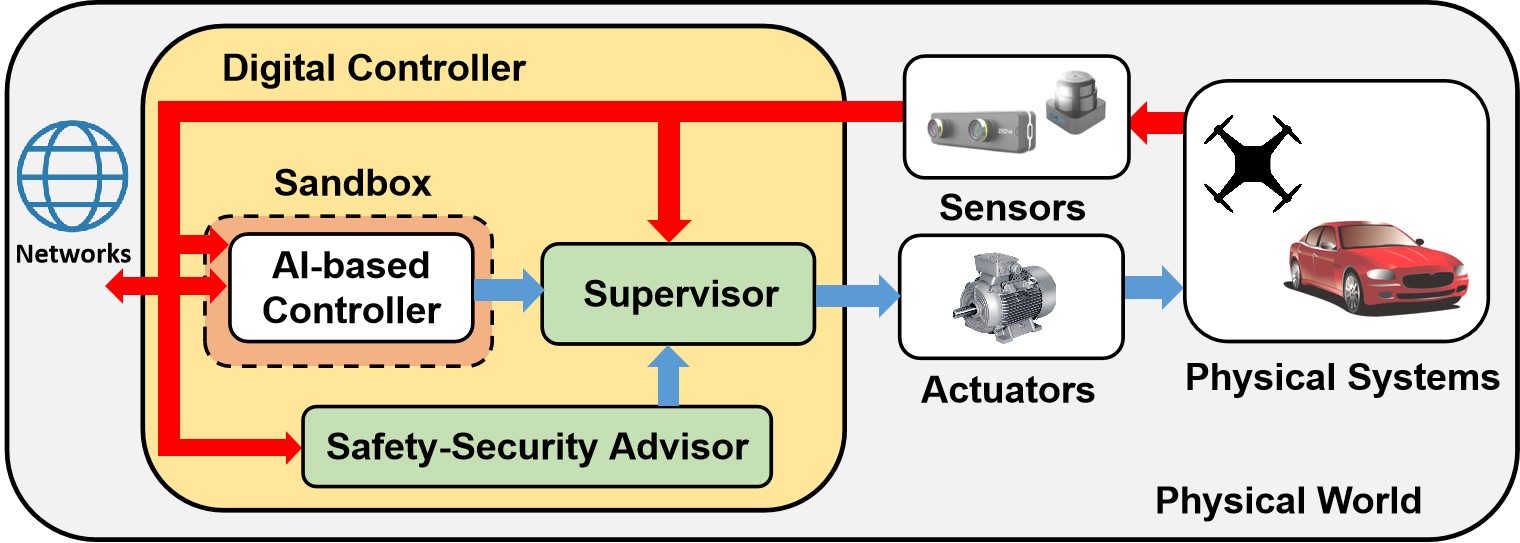

To address these concerns, this project introduces a system-level, secure-by-construction framework, called the Safe-sec-visor architecture, which is designed to sandbox AI-based controllers at runtime, thereby enforcing the overall safety and security of physical systems. The architecture comprises two main components: a safety-security advisor and a supervisor. The supervisor checks each input from the AI-based controller and accepts it only if it does not compromise the system's safety or security. When the AI-based controller's input is deemed unsafe or insecure, the safety-security advisor steps in and provides an alternative control command that ensures the overall safety and security of the system. The safety-security advisor is employed only when necessary. The proposed architecture therefore allows exploiting the functionalities of AI-based controllers without requiring any formal guarantees over these controllers.
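The switching logic described above can be illustrated with a minimal Python sketch. This is not the implementation from the papers: the class `SafeSecVisor`, its `filter` method, and the toy plant (a deterministic one-dimensional system `x_next = x + u` with safe set `|x| <= 1`) are all hypothetical names and assumptions chosen for illustration. The actual architecture works with stochastic games and probabilistic guarantees, which this sketch does not model.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class SafeSecVisor:
    """Runtime sandbox: a supervisor check plus a fallback advisor (illustrative)."""
    # Hypothetical supervisor check: True if the proposed input may be applied.
    is_acceptable: Callable[[float, float], bool]
    # Hypothetical safety-security advisor: fallback input for a given state.
    advisor: Callable[[float], float]
    fallback_count: int = 0

    def filter(self, state: float, proposed: float) -> float:
        """Return the AI controller's input if accepted, else the advisor's."""
        if self.is_acceptable(state, proposed):
            return proposed
        self.fallback_count += 1
        return self.advisor(state)


# Toy plant (assumption): x_next = x + u, with safe set |x| <= 1.
def stays_in_safe_set(x: float, u: float) -> bool:
    return abs(x + u) <= 1.0


# Toy advisor (assumption): move halfway back toward the origin.
def steer_to_origin(x: float) -> float:
    return -0.5 * x


visor = SafeSecVisor(is_acceptable=stays_in_safe_set, advisor=steer_to_origin)
accepted = visor.filter(0.5, 0.2)  # next state 0.7 stays inside the safe set
rejected = visor.filter(0.5, 0.9)  # next state 1.4 leaves it, so the advisor takes over
```

In this sketch the advisor is consulted only when the supervisor rejects an input, which mirrors the "only when necessary" behavior; `fallback_count` simply records how often that happened.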

Highlights of the results:

The design of the proposed architecture is independent of how the unverified controllers are constructed, so it can sandbox arbitrary types of unverified controllers.

The proposed architecture can enforce high-level logical safety properties modeled by deterministic finite automata over systems modeled by discrete-time non-cooperative stochastic games with continuous state and input sets.

The proposed architecture can handle both safety and security properties simultaneously.
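To make the notion of a safety property modeled by a deterministic finite automaton (DFA) concrete, the following Python sketch runs a small DFA over a sequence of state labels. The class `DFA`, the labels `"safe"` and `"unsafe"`, and the convention that the accepting state marks a violation are assumptions made for this example; they are not taken from the papers listed below.

```python
from dataclasses import dataclass
from typing import Dict, FrozenSet, Iterable, Tuple


@dataclass(frozen=True)
class DFA:
    """Deterministic finite automaton over string labels (illustrative)."""
    initial: str
    accepting: FrozenSet[str]
    transitions: Dict[Tuple[str, str], str]

    def run(self, word: Iterable[str]) -> str:
        """Return the automaton state reached after reading the word."""
        q = self.initial
        for label in word:
            q = self.transitions[(q, label)]
        return q

    def accepts(self, word: Iterable[str]) -> bool:
        return self.run(word) in self.accepting


# Assumed convention: the accepting state "q_bad" is a trap state that
# marks a violation, i.e. the trace visited an "unsafe" label at least once.
violation_dfa = DFA(
    initial="q0",
    accepting=frozenset({"q_bad"}),
    transitions={
        ("q0", "safe"): "q0",
        ("q0", "unsafe"): "q_bad",
        ("q_bad", "safe"): "q_bad",
        ("q_bad", "unsafe"): "q_bad",
    },
)

safe_trace_violates = violation_dfa.accepts(["safe", "safe", "safe"])
bad_trace_violates = violation_dfa.accepts(["safe", "unsafe", "safe"])
```

Under this convention, a trace satisfies the safety property exactly when the DFA does not accept it. A runtime supervisor could track the current automaton state alongside the system state, although how the papers combine the two is not shown here.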

Related papers

B. Zhong, S. Liu, M. Caccamo, and M. Zamani, Towards trustworthy AI: Sandboxing AI-based unverified controllers for safe and secure Cyber-Physical Systems, In: Proceedings of 62nd IEEE Conference on Decision and Control (CDC), pp. 1833-1840, 2023.

B. Zhong, H. Cao, M. Zamani, and M. Caccamo, Towards safe AI: Sandboxing DNNs-based Controllers in Stochastic Games, In: Proceedings of the 37th AAAI Conference on Artificial Intelligence, Vol. 37 No. 12, pp. 15340-15349, 2023.

B. Zhong, A. Lavaei, H. Cao, M. Zamani, and M. Caccamo. Safe-visor architecture for sandboxing (AI-based) unverified controllers in stochastic Cyber-Physical Systems, In: Nonlinear Analysis: Hybrid Systems, 43C. December 2021 (Preprint)

A. Lavaei, B. Zhong, M. Caccamo, and M. Zamani. Towards trustworthy AI: safe-visor architecture for uncertified controllers in stochastic cyber-physical systems, In: Proceedings of the International Workshop on Computation-Aware Algorithmic Design for Cyber-Physical Systems, ACM, 2021

B. Zhong, M. Zamani, and M. Caccamo. Sandboxing controllers for stochastic cyber-physical systems. In: 17th International Conference on Formal Modelling and Analysis of Timed Systems (FORMATS), Lecture Notes in Computer Science (VOL 11750), 2019.

Related talks

Presentation in CAADCPS Workshop, CPS-IoT Week 2021 (Link: YouTube)